Unlocking Creative Control with IPAdapter

Putting the latest image generation technology to work in our new campaign

At Addition we’ve been designing AI systems that use one or more image generation steps for a while, and it’s often a bit of a headache. Diffusion models like Stable Diffusion and Midjourney are great, but the output can be a crapshoot – a slot machine that delights but also disappoints.

For many advertising and marketing use cases, this unreliability poses major issues. Sure, it can be great for brainstorming and storyboards. But when it comes to representing actual products or people in specific scenes, AI hasn’t been ready for the task.

Recent advances in image generation models have been making headway in this area, and the open-source community that’s been building around Stable Diffusion has been chipping away at getting these models to behave in a controllable manner.

With the recent release of IPAdapter, AI takes a big step towards fine-grained control of generated imagery, and with it a slew of new use cases becomes possible.

First, let’s break down IPAdapter and how it works, and then we’ll take a look at a recent project where we deployed it at scale.

What is IPAdapter?

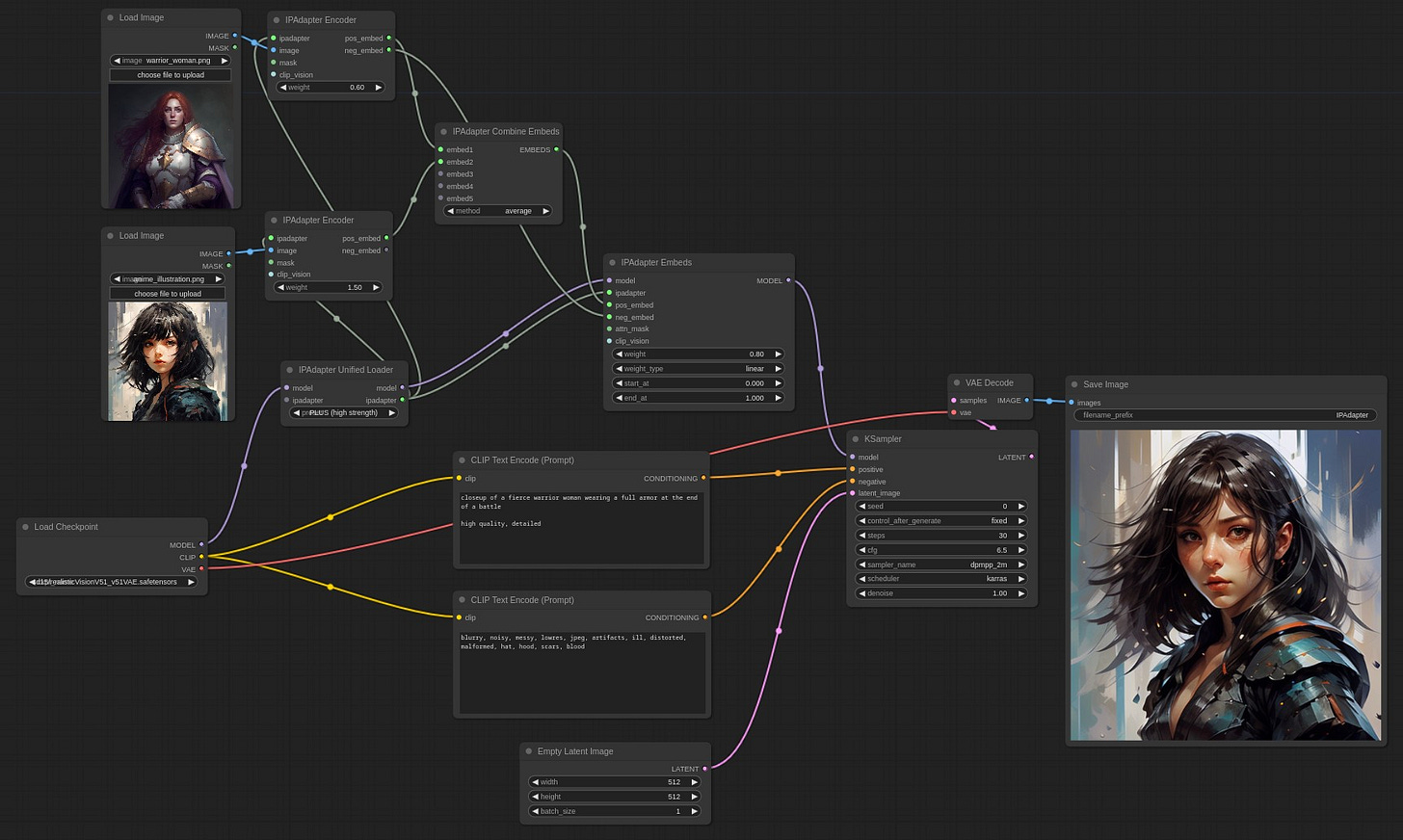

IPAdapter is a lightweight model that piggybacks on image models like Stable Diffusion. You can read the paper on GitHub if you want to get into the details, but the long and short of it is that it lets you use an image as a prompt, alongside the usual text prompt, which enables much more fine-grained control than a text prompt alone. As the old saying goes, a picture is worth a thousand words.
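For readers who want a feel for the mechanism, here is a minimal sketch of the decoupled cross-attention idea described in the IPAdapter paper: the model attends to the text features and the image features separately, then adds the two results, with a scale factor on the image branch. This is a toy illustration in plain Python, not the actual implementation. The function names and the `image_scale` parameter are our own labels, and the keys and values are assumed to be already projected (in the real model they come from learned projection layers).

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over plain lists of vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys
        ]
        weights = softmax(scores)
        outputs.append(
            [
                sum(w * v[j] for w, v in zip(weights, values))
                for j in range(len(values[0]))
            ]
        )
    return outputs


def decoupled_cross_attention(queries, text_kv, image_kv, image_scale=1.0):
    """Attend to text and image features separately, then sum the results.

    text_kv and image_kv are (keys, values) pairs. image_scale is a
    hypothetical name for the weight on the image branch; setting it to 0
    falls back to plain text-only cross-attention.
    """
    text_out = attention(queries, *text_kv)
    image_out = attention(queries, *image_kv)
    return [
        [t + image_scale * i for t, i in zip(t_row, i_row)]
        for t_row, i_row in zip(text_out, image_out)
    ]


# Toy usage: one query, one text token, one image token.
queries = [[1.0, 0.0]]
text_kv = ([[1.0, 0.0]], [[2.0, 3.0]])
image_kv = ([[0.0, 1.0]], [[10.0, 20.0]])
blended = decoupled_cross_attention(queries, text_kv, image_kv, image_scale=0.5)
```

Turning `image_scale` up makes the reference image dominate the result, while turning it down hands control back to the text prompt.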

What’s nice about IPAdapter is that it complements other technologies for controlling image generation. For example, you can combine it with a fine-tuned model to generate in a specific style.

Or you can combine it with a control model to generate based on a specific shape, sketch, or pose.

Realtor.com Case Study: Turning Your Home Into Art

Earlier this month we used these techniques to launch a campaign for Realtor.com that encouraged homeowners to ‘claim their home’ on the platform.

In just a few clicks you can turn your home into artwork using a handful of pre-trained style pipelines that we set up.

Each pipeline uses a subset of AI-generated ‘style images’ that get fed to IPAdapter along with an image of the user’s home.

By modifying the style image and adjusting parameters, we create highly controlled image pipelines that are capable of turning your home into a wide range of art styles.
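To make the idea of a style pipeline concrete, here is a rough sketch of how such a setup could be described in code. Everything in it is hypothetical: the `StylePipeline` class, the `build_job` helper, the file names, and the `image_prompt_scale` parameter are illustrative stand-ins, not our production configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StylePipeline:
    """Hypothetical description of one art style."""

    name: str
    style_images: tuple[str, ...]  # reference images fed to IPAdapter
    image_prompt_scale: float = 0.7  # assumed knob: how strongly style applies


def build_job(pipeline: StylePipeline, home_image: str) -> dict:
    """Bundle the user's home photo with a pipeline's style settings."""
    if not pipeline.style_images:
        raise ValueError(f"pipeline {pipeline.name!r} has no style images")
    return {
        "style": pipeline.name,
        "home_image": home_image,
        "style_images": list(pipeline.style_images),
        "image_prompt_scale": pipeline.image_prompt_scale,
    }


# Illustrative pipeline with made-up file names.
watercolor = StylePipeline(
    name="watercolor",
    style_images=("watercolor-01.png", "watercolor-02.png"),
    image_prompt_scale=0.6,
)
job = build_job(watercolor, "my-home.jpg")
```

Keeping each style as a small, immutable record means a new style is a matter of swapping reference images and tuning a number, rather than rebuilding the pipeline.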

Simply choose a picture of your home, pick a style, and watch it get transformed into something that’s completely new, but still recognizable as your home.

If you’re curious to try this out and don’t feel like installing ComfyUI on your machine, you can visit Realtor.com/myhome between now and June 30th to turn your home into a digital portrait.

🙌 Shout out to our friends at Realtor.com’s Brand Bureau for designing and developing the landing page for this campaign.

Addition is an applied AI R&D lab using generative models to solve problems for brands and people.

🤔 Have a use case you’re interested in exploring? Feel free to get in touch.

🎉 Humble brag: Our new website is live at Addition.ml.

🤖 Sign up to join us for our State of AI Talk in LA on June 10th.