Fine-tuning Models for Creativity

A new approach to fine-tuning focused on generalized creative thinking, rather than repetitive tasks.

I’m fascinated by the concept of machine creativity. How is it that technologies trained only on content from the past are capable of generating new ideas? It’s a bit of a philosophical question (and one with its share of skeptics), but what I saw from our early experiments was that, with the right engineering techniques, AI was capable of generating first drafts rivaling those I’d expect to see from seasoned creatives.

We’ve now been exploring foundation models’ potential to accomplish creative tasks for several years.

You can read about those early experiments and play around with a demo here.

As the technology has become more powerful, we’ve been able to create more intricate systems that leverage agentic behaviors and function calling. These days, many of our projects involve dozens of AI functions working in harmony to execute tasks that might otherwise have been impossible to pull off. But as AI has become more factually accurate and generalized, its creative superpowers haven’t kept pace. Anyone who’s tried to get ChatGPT to write creatively knows what I’m talking about.

Creative Fine-tuning 101

A big part of this comes down to fine-tuning. Frontier models like GPT-4, Claude, and Gemini are fine-tuned by their creators to accomplish a wide range of tasks, from helping you code a website to giving you an accurate history lesson on a subject of your choosing. It’s important that these models are detail-oriented, factual, politically correct, and free from opinions, but these behaviors are at odds with creativity. In practice, the process of conditioning these models for accuracy also causes them to lose touch with their creativity. Some people in the AI community go so far as to compare this process to a lobotomy.

We’ve been exploring new ways to realign frontier models for creativity through a fine-tuning process that reactivates the creative vector space in their brains and undoes some of the lobotomizing effects of their factory training.

Let’s take a look at how this works using a hands-on example that compares ChatGPT to a fine-tuned GPT-4o model that’s been trained on award-winning creative ideas. We’ll focus on an advertising use case for the sake of this demonstration, but the general concepts here can apply to a wide range of creative fields.
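For readers who want to try something similar, OpenAI’s fine-tuning API takes training data as a JSONL file. Each line holds one example conversation under a "messages" key. The sketch below shows how (brief, idea) pairs might be turned into that format. It is an assumption, not our actual pipeline: the system prompt text, the function names, and the sample content are hypothetical placeholders.

```python
import json
from typing import Iterable

# Hypothetical system prompt; the one used for our fine-tuned model isn't published here.
SYSTEM_PROMPT = (
    "You are an award-winning creative director. Given a brief, "
    "respond with one bold, original campaign idea."
)


def to_chat_example(brief: str, idea: str) -> dict:
    """Wrap one (brief, idea) pair as a system/user/assistant conversation."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": brief},
            {"role": "assistant", "content": idea},
        ]
    }


def write_jsonl(pairs: Iterable[tuple[str, str]], path: str) -> int:
    """Write one JSON object per line (JSONL); return how many examples were written."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for brief, idea in pairs:
            f.write(json.dumps(to_chat_example(brief, idea)) + "\n")
            count += 1
    return count


if __name__ == "__main__":
    # Placeholder pair standing in for a curated set of award-winning ideas.
    example = to_chat_example(
        "Re-imagine 'Share a Coke' for today's internet.",
        "(award-winning idea text goes here)",
    )
    print(json.dumps(example, indent=2))
```

The resulting file would then be uploaded and used to start a fine-tuning job. In the OpenAI Python SDK, that happens through `client.files.create(..., purpose="fine-tune")` and `client.fine_tuning.jobs.create(...)`. The quality and curation of the pairs matter far more than this plumbing.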

Out of the gate, ChatGPT is somewhat lousy when asked to come up with a creative idea. Here’s its response to a request for a Coca-Cola idea that re-imagines the brand’s iconic “Share a Coke” campaign.

To encourage engagement and virality, Coca-Cola could introduce a sweepstakes or contest. People who share their stories (via video or written submission) could win an all-expenses-paid trip to a Coca-Cola-sponsored "Story Journey", where they’ll have the opportunity to connect with others who’ve shared similar experiences.

You can, of course, push this further with more robust prompt engineering.

But the fine-tuned model nails the brief on the first go, and since I’m only using a single-paragraph system prompt, I can take full advantage of its chat capabilities to iterate and revise the idea to my liking.
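Iterating in chat comes down to resending the whole conversation on every turn, so the model sees its earlier drafts and your feedback. Below is a minimal sketch under stated assumptions. `complete` is a hypothetical, injectable function that takes the message list and returns the reply text. In practice it might wrap `client.chat.completions.create(model=..., messages=...)` from the OpenAI Python SDK and return `response.choices[0].message.content`. The stub model exists only so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical signature: complete(messages) -> reply text.
# Injected so the sketch runs without network access or API keys.
Complete = Callable[[list[dict]], str]


@dataclass
class CreativeSession:
    """Keeps the running conversation so each revision sees prior drafts."""
    system_prompt: str
    complete: Complete
    messages: list[dict] = field(default_factory=list)

    def __post_init__(self) -> None:
        # The short, single-paragraph system prompt goes first, once.
        self.messages.append({"role": "system", "content": self.system_prompt})

    def ask(self, text: str) -> str:
        """Send a request or revision note and record the model's reply."""
        self.messages.append({"role": "user", "content": text})
        reply = self.complete(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply


if __name__ == "__main__":
    # Stub model for illustration: reports how many user turns it has seen.
    def fake_model(msgs: list[dict]) -> str:
        return f"draft {sum(m['role'] == 'user' for m in msgs)}"

    session = CreativeSession("You are a creative director.", fake_model)
    print(session.ask("Give me an idea that re-imagines 'Share a Coke'."))
    print(session.ask("Push it further toward reconciliation."))
```

Keeping the system prompt short leaves most of the context window for the back-and-forth itself.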

Here’s what the fine-tuned model outputs on its first try:

We'll use AI to analyze thousands of messages on social media focusing on hate speech and divisiveness. Using this information, we'll develop an algorithm that detects trending themes of hate and transforms them into messages of unity and prints them on Coca-Cola bottles. This re-imagined 'Share A Coke' model, titled 'Unhate,' turns AI from a tool of division to one of unification. By turning symbols of hate into symbols of harmony, we'll effectively use Coca-Cola's platform to spread positivity and bring people together in a time of division.

Activating The Creative Latent Space

When we fine-tune a large model, we’re not just teaching it how to perform a task. We’re activating regions of its brain associated with creative thinking.

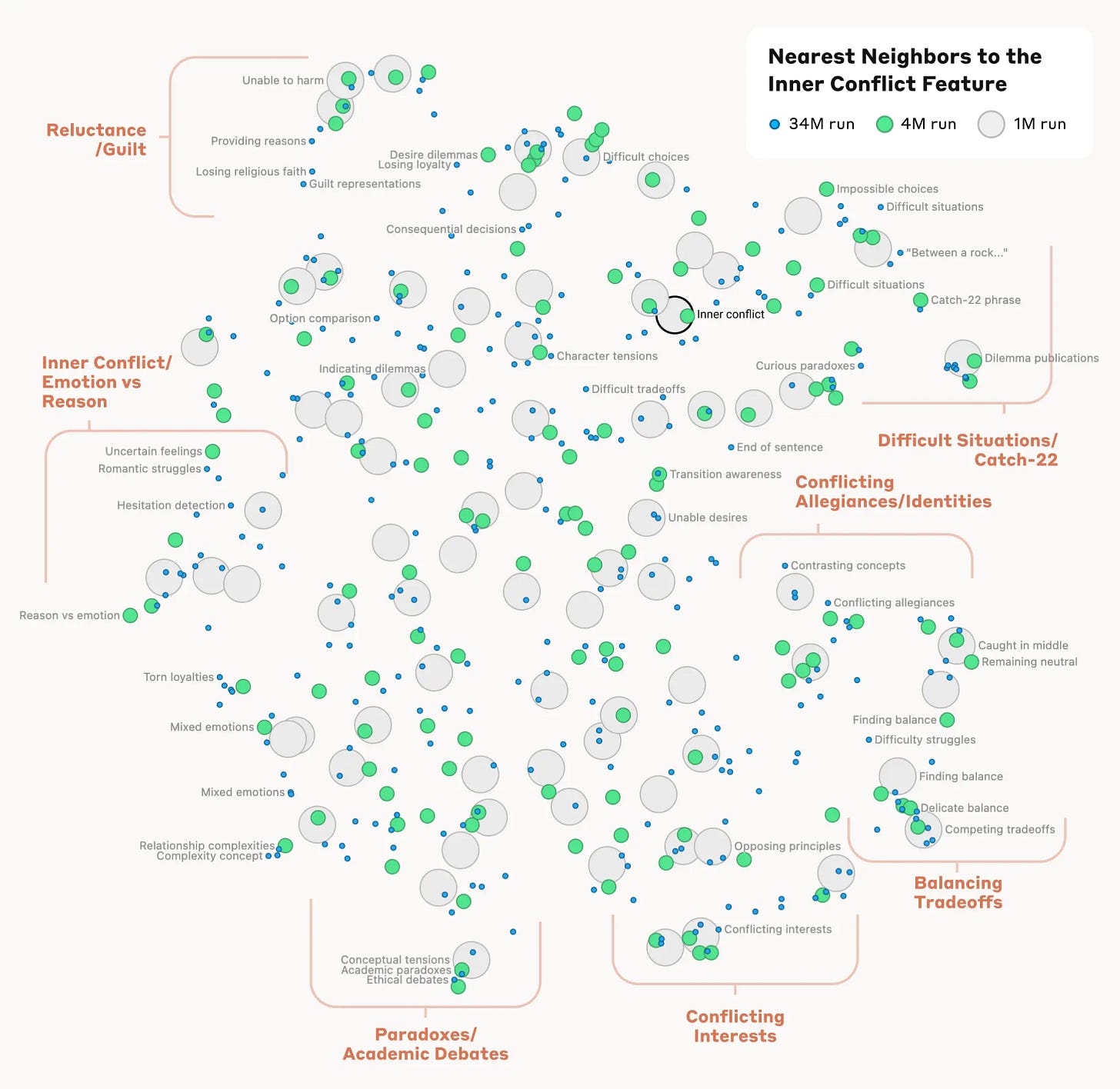

In the last year, AI researchers have begun to map the internal states of frontier models: basically, how they think and, very importantly, where they connect semantic concepts across their vast latent space.

Generalizing Models for Creativity

The same concept applies to creative thinking. For example, our fine-tuned GPT-4o isn’t just good at generating ideas. It also shows a significant improvement in idea classification, i.e., the ability to judge whether a given idea is good or not. When compared against an advertising professional, the base GPT-4o model was terrible at idea classification and got it wrong the majority of the time (which helps explain why AI is internet-famous for its poor taste). The fine-tuned model, on the other hand, agreed with the expert’s judgment more than 75% of the time.
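The simplest way to measure "agreeing with the expert" is a percent-agreement score. Show the model and the expert the same set of ideas, record each one’s good/bad verdict, and count the matches. The sketch below assumes binary labels, and its labels are toy values, not the evaluation data behind the numbers above. If most ideas in a test set are bad, a chance-corrected statistic such as Cohen’s kappa is a useful companion to raw agreement.

```python
from typing import Sequence


def agreement_rate(model_labels: Sequence[bool], expert_labels: Sequence[bool]) -> float:
    """Fraction of ideas where the model's good/bad verdict matches the expert's."""
    if len(model_labels) != len(expert_labels):
        raise ValueError("label lists must be the same length")
    if not expert_labels:
        raise ValueError("need at least one labeled idea")
    matches = sum(m == e for m, e in zip(model_labels, expert_labels))
    return matches / len(expert_labels)


if __name__ == "__main__":
    # Toy labels (True = "good idea"); illustrative only.
    expert = [True, False, True, True, False]
    model = [True, False, False, True, False]
    print(f"{agreement_rate(model, expert):.0%}")  # 80%
```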

The big takeaway here is that the fine-tuned model was never trained to do classification. It improved significantly anyway because fine-tuning activated the creative regions of its ‘brain’.

Building off of these insights, we can adapt our fine-tuning process to focus on more generalized creative thinking.

For example, rather than simply fine-tuning a model on creative idea generation, we can incorporate datasets related to insight generation, headline writing, and naming. In our own internal R&D, we’ve noticed improvements in the model’s outputs across a range of tasks when we do this.

For instance, I can add headlines to our fine-tuned model’s training set alongside creative ideas. This improves its headline writing without compromising its ability to come up with creative ideas.
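Mechanically, adding headlines (or insights, or names) means merging several task datasets into one training file. Below is a minimal sketch, assuming chat-format records with one system/user/assistant conversation per example. The per-task system prompts are hypothetical, and we don’t know that this is the framing our own pipeline uses. An equally plausible alternative is one shared system prompt with the task stated in the user message.

```python
import random

# Hypothetical task-specific system prompts; each example keeps its own task framing
# so a single model can learn several related creative skills.
TASK_PROMPTS = {
    "idea": "Given a brief, propose one original campaign idea.",
    "headline": "Given a campaign idea, write a billboard headline.",
    "naming": "Given a product description, suggest a name.",
}


def build_mixed_dataset(examples_by_task: dict[str, list[tuple[str, str]]],
                        seed: int = 0) -> list[dict]:
    """Merge (input, output) pairs from several tasks into one shuffled chat dataset."""
    mixed = []
    for task, pairs in examples_by_task.items():
        system = TASK_PROMPTS[task]  # a KeyError flags an unknown task name
        for user_text, assistant_text in pairs:
            mixed.append({"messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": assistant_text},
            ]})
    # Shuffle with a fixed seed so tasks are interleaved rather than stored
    # in contiguous blocks, and the order is reproducible.
    random.Random(seed).shuffle(mixed)
    return mixed


if __name__ == "__main__":
    data = build_mixed_dataset({
        "idea": [("Brief: ...", "Idea: ...")],
        "headline": [("Idea: ...", "Headline: ...")],
    })
    print(len(data))  # 2
```

Each record can then be written out one per line as JSONL for training.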

Here’s one of the headlines it spits out when I feed the idea back into the fine-tuned model:

Share a Coke with the person you unfollowed.

Pretty good, especially compared to the base model’s lame attempts at copywriting.

If I want, I can go a step further and feed both the idea description and the billboard headline back to the model, asking it to come up with art direction for the ad. Even though the model isn’t specifically fine-tuned for this task, it handles it significantly better than the base model.
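Chaining steps like this means passing each output forward into the next prompt: idea, then headline, then art direction. The sketch below rests on a few assumptions. `complete` is a hypothetical, injectable function that takes a message list and returns reply text, and it could wrap any chat-completion client. The system prompt and prompt wording are illustrative, not the prompts we actually use.

```python
from typing import Callable

# Hypothetical signature: complete(messages) -> reply text.
Complete = Callable[[list[dict]], str]

# Hypothetical shared system prompt for the fine-tuned model.
SYSTEM = "You are a creative director at an advertising agency."


def ask(complete: Complete, prompt: str) -> str:
    """Single-turn request to the model with the shared system prompt."""
    return complete([
        {"role": "system", "content": SYSTEM},
        {"role": "user", "content": prompt},
    ])


def idea_to_art_direction(complete: Complete, idea: str) -> dict[str, str]:
    """Chain: idea -> headline -> art direction, feeding each output forward."""
    headline = ask(complete, f"Write a billboard headline for this idea:\n{idea}")
    art = ask(
        complete,
        "Describe the art direction for a billboard.\n"
        f"Idea: {idea}\nHeadline: {headline}",
    )
    return {"idea": idea, "headline": headline, "art_direction": art}


if __name__ == "__main__":
    # Stub model that reports which step it was asked to do.
    def fake_model(msgs: list[dict]) -> str:
        prompt = msgs[-1]["content"]
        return "HEADLINE" if prompt.startswith("Write a billboard headline") else "ART"

    print(idea_to_art_direction(fake_model, "Unhate: turn trending hate into unity."))
```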

The billboard will feature a split design. On the left, a dark, chaotic background filled with negative social media comments and hate speech, symbolizing the division and negativity online. On the right, a bright, clean background with the iconic Coca-Cola red and white. The transition from left to right will be a gradient, symbolizing the transformation from hate to unity. The Coca-Cola bottle will be placed in the center, acting as the bridge between the two worlds. The copy "Share a Coke with the person you unfollowed" will be placed prominently on the right side, reinforcing the message of unity and reconciliation.

Fine-Tuned Creative Co-Pilots

These findings point toward more general-purpose ‘Creative AIs’ that can handle multiple functions across a brand’s ecosystem. While there’s still an argument for task-specific fine-tuning, many use cases can benefit from generalization. Think of an AI chatbot that generates insights and writes headlines, then evaluates them for quality. Each of these tasks could be handled by its own task-specific fine-tuned model. Using one generalized model for all three, though, lets a human expert riff with the AI and iterate in a way that’s more participatory and less like pulling a slot machine.
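A generalized model can both draft and judge its own work, which makes a simple generate-then-filter loop possible. The minimal sketch below assumes a hypothetical, injectable `complete` function that takes a message list and returns reply text. The prompts and the GOOD/BAD verdict convention are illustrative assumptions. In a real setup you would likely sample with a nonzero temperature so the candidates differ.

```python
from typing import Callable

# Hypothetical signature: complete(messages) -> reply text.
Complete = Callable[[list[dict]], str]

SYSTEM = "You are a creative director."  # hypothetical shared system prompt


def _ask(complete: Complete, prompt: str) -> str:
    """Single-turn request with the shared system prompt."""
    return complete([{"role": "system", "content": SYSTEM},
                     {"role": "user", "content": prompt}])


def generate_and_filter(complete: Complete, idea: str, n: int = 5) -> list[str]:
    """Ask one model for n headlines, then have the same model judge each one."""
    keep = []
    for i in range(n):
        headline = _ask(complete, f"Headline option {i + 1} for this idea:\n{idea}")
        verdict = _ask(complete, f"Is this headline good? Answer GOOD or BAD.\n{headline}")
        # Keep only headlines the model itself classifies as good.
        if verdict.strip().upper().startswith("GOOD"):
            keep.append(headline)
    return keep
```

The surviving candidates are a shortlist for the human expert to react to, not a final answer.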

Continuing to build off this approach, we can integrate more and more examples of creative data that we think will steer our assistant toward creative thinking. Consumer insights. Cultural tensions. Artistic expression. All of these concepts can be embedded into fine-tuning data and used to activate the latent space in the LLM’s brain.

In the future, we think many organizations will use insights like these to fine-tune models into generalized experts: excelling at a variety of related tasks without being overfitted to one specific, repetitive motion. Much as knowledge workers build a range of complementary skills while remaining generalists, this approach to fine-tuning lets AI supercharge a broad set of skills that reinforce one another.

Addition is an applied AI studio for modern brands.

Visit Addition.ml to learn more.
